A New Twitter Lie Detector Could Change Everything

What if we could tell instantly when someone tweeted a hoax? Almost enough to drive you back to Twitter, eh?

Go on Twitter now, search #breakingnews and see how much comes up. Everything from Adam Driver’s rumoured Star Wars gig to a moving truck being spotted at Katy Perry’s house, post the John Mayer break-up. Twitter is also a key driver of referral traffic for major news outlets; according to Guardian News & Media CEO, Andrew Miller, 10% of the newspaper’s traffic now comes from social media: “Twitter is the fastest way to break news now.”

Like anything that seems too good to be true, there’s a catch: pranksters are everywhere, fake photos and false claims can go unverified, death hoaxes can spread at speed, and audiences treat RTs as truth when they’re anything but. There is an unprecedented number of tools available to anyone who’s looking to verify a tweet, but only the best journalists actually use them.

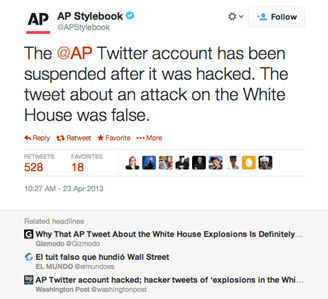

Take last year’s Boston bombings: after the two bombs exploded, a “media frenzy” kicked off on Twitter, and in the rush to break the story first, the media “got it wrong.” In the same month, the Associated Press’s @AP account was hacked and used to falsely report that the White House was under attack. NO BIGGIE.

A few weeks back, Rebecca Onion wrote a piece for Slate examining the hugely popular phenomenon of ‘History In Pictures’-style Twitter accounts, which publish unverified, unreferenced, unattributed photos of famous people from the past, often with wrong or misleading captions — leaving actual historians everywhere with their heads in their hands.

What if there were a way of stopping these stories from circulating before they go viral?

Introducing ‘Pheme’

A team of European researchers is developing new software to try to solve the problem. Pheme will look at everything from the tweeting history of accounts to the use of emotive language in an attempt to stop false rumours in their tracks.

“We’re not trying to police the Internet,” says senior researcher Kalina Bontcheva. Rather, the software will send journalists information on a dashboard, which they will “monitor to determine the legitimacy of news.”

The project is led by the University of Sheffield, and was sparked by the 2011 London Riots. Dr. Bontcheva said, “There was a suggestion after the 2011 riots that social networks should have been shut down, to prevent the rioters using them to organise. But social networks also provide useful information – the problem is that it all happens so fast and we can’t quickly sort truth from lies.”

Pheme aims to sort online content into four main categories: “speculation – such as whether interest rates might rise; controversy – as over the MMR vaccine; misinformation, where something untrue is spread unwittingly; and disinformation, where it’s done with malicious intent.”

As it stands, manually categorising this content is time-consuming and difficult. Fingers crossed the software is available soon.

–

Katie Booth is Junkee’s editorial intern. She tweets @kboo2344